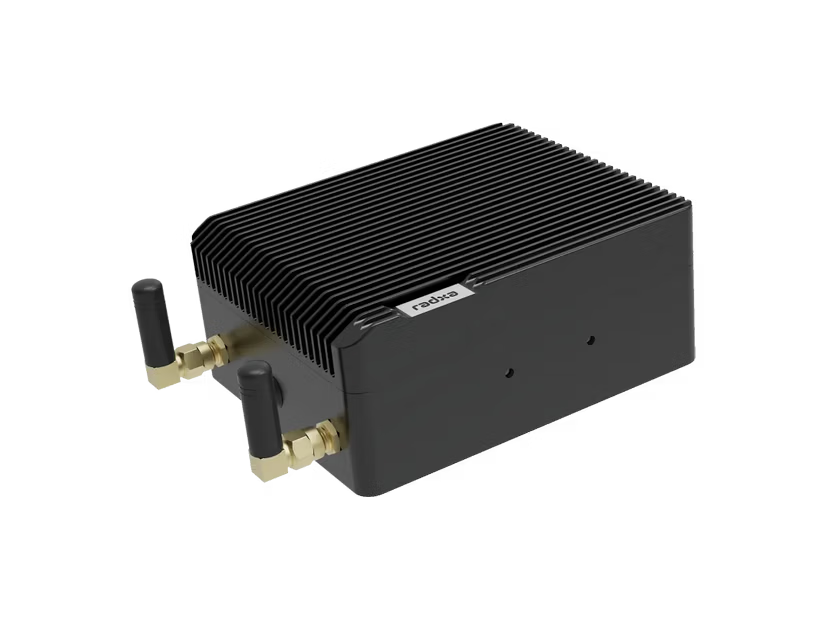

Radxa (Fogwise®) announced the official release of the Fogwise AIRbox Q900 on September 30, 2025. Built on the Qualcomm Dragonwing IQ-9075, with an NPU rated at up to 200 TOPS (INT8 sparse), 36 GB of ECC memory, and dual 2.5GbE, it targets on-site inference.

Radxa (Fogwise®) officially released the Fogwise AIRbox Q900 on September 30, 2025; product information is available on the official product page and in the docs. Powered by the Qualcomm Dragonwing™ IQ-9075, this edge AI box delivers NPU performance of up to 200 TOPS (INT8 sparse). It fits requirements such as "running AI inference on-site without the cloud" and "a rugged box with dual 2.5GbE and SIM support". Be aware, however, that models must be optimized for Qualcomm's toolchains (QAIRT, etc.) first.

Specs

| ■ Fogwise AIRbox Q900 | |

|---|---|

| Processor | Qualcomm Dragonwing™ IQ-9075 (Kryo Gen6 8-core Max 2.36GHz) + Cortex-R52 4-core (Max 1.85GHz) |

| GPU | Adreno 663 (Max 1.2 TFLOPS FP32) |

| NPU | Dual Hexagon NPU (Max 200 TOPS@INT8 sparse) |

| Memory | 36GB LPDDR5 (96-bit, 6400MT/s, ECC) |

| Storage | 128GB UFS 3.1 (Onboard) + 32MB SPI Flash |

| Expansion | M.2 M Key 2230 (PCIe Gen4 x4), Mini PCIe (Wi-Fi/4G/5G), Nano SIM, UFS/eMMC module |

| Network | Wired: 2x 2.5GbE (TSN), Wireless: Wi-Fi 6/BT 5.4 via Mini PCIe, or 4G/5G + Nano SIM |

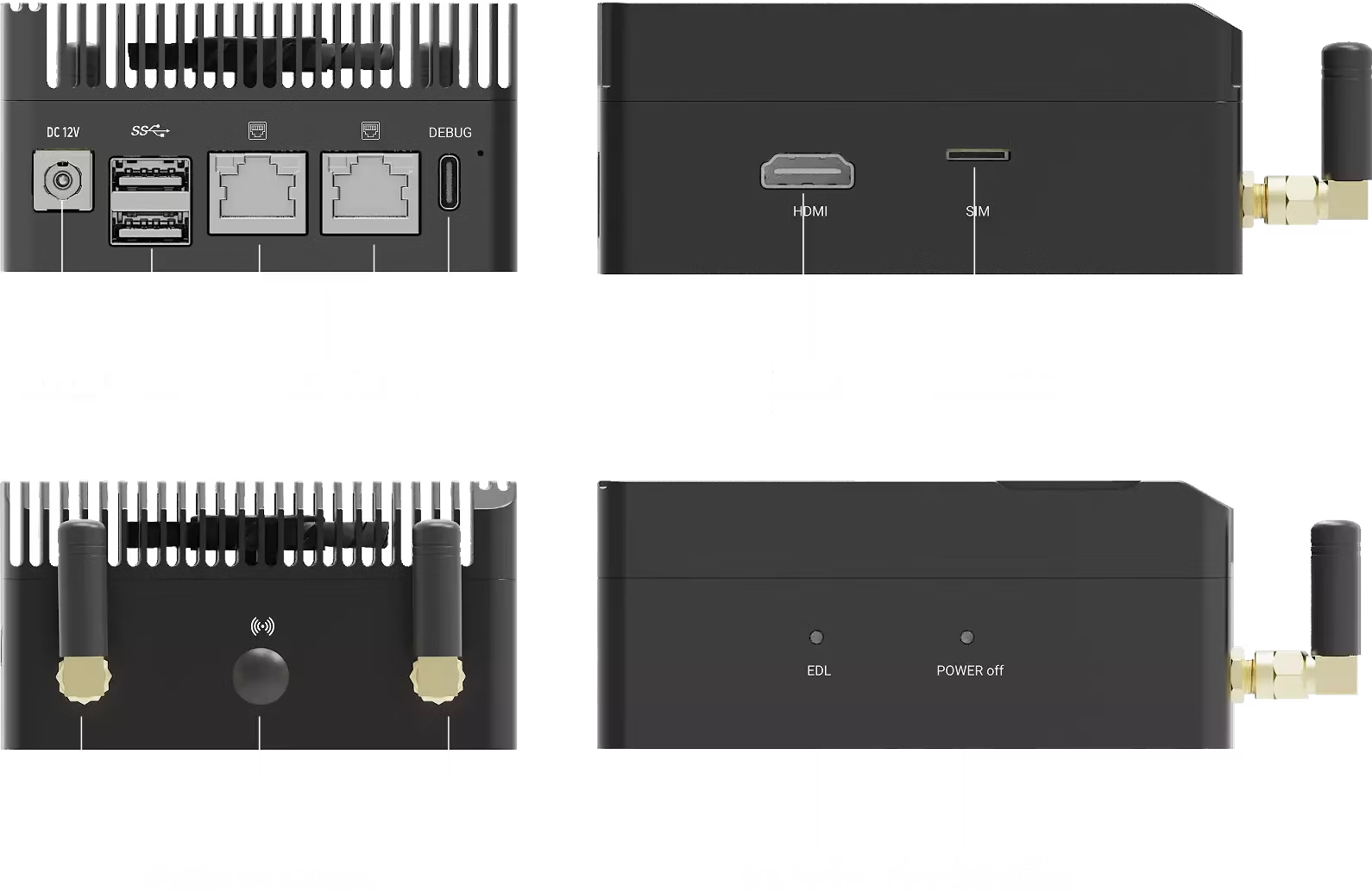

| Video | HDMI 2.0 (Max 4K@60) x1 |

| USB | USB 3.1 Gen2 Type-A HOST x1, OTG x1, Type-C (Console/Debug) |

| Size | 104x84x45mm |

| Power | DC 12V Input (Recommended 12V/5.4A) |

| OS | Ubuntu, Yocto support. RadxaOS (Debian/Ubuntu based) docs available |

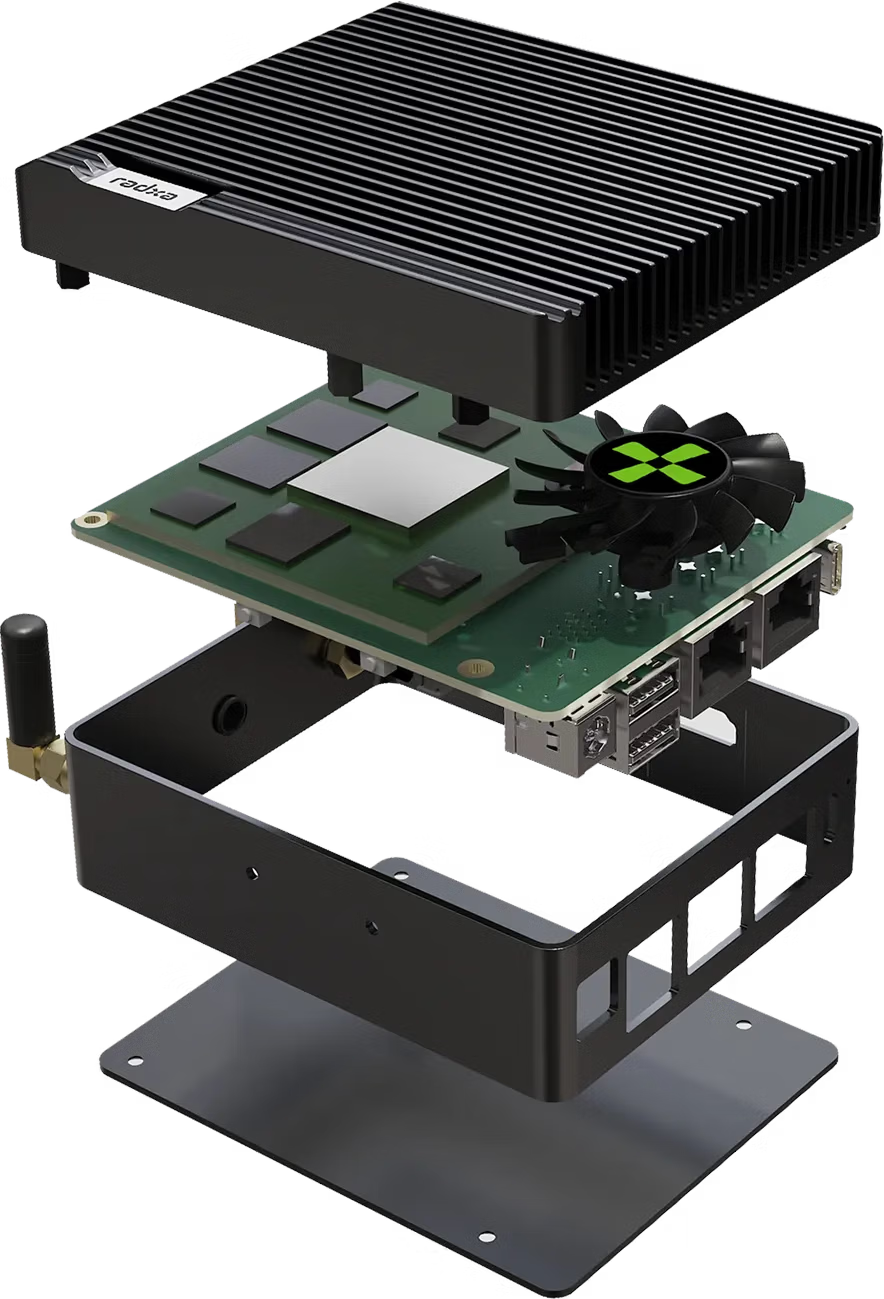

The box packs a dual Hexagon NPU rated at up to 200 TOPS (INT8 sparse) and 36 GB of ECC memory into a 104 x 84 x 45 mm metal chassis, along with dual 2.5GbE and SIM support. The weight has not been confirmed. The Quick Start guide says to prepare a 12 V DC power supply, an HDMI cable, an Ethernet cable, and so on.

Features

Processor and NPU

The IQ-9075's positioning is interesting. Although it descends from Qualcomm's mobile SoC lineage, here it serves strictly as a "field box" that puts the CPU, GPU, and NPU to work together. The dual Hexagon NPU, rated at up to 200 TOPS (INT8 sparse), is meant to let inference run entirely on the device.

Note that this is a different world from NVIDIA Jetson, where CUDA makes porting comparatively simple. Here you should expect to optimize models for Qualcomm's toolchains (QAIRT, etc.), so estimate the porting effort based on the models and code you already have.
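To make "INT8" concrete: NPUs like this one run models whose values have been quantized to 8-bit integers. The sketch below shows generic symmetric per-tensor INT8 quantization in plain Python. It illustrates the idea only and is not QAIRT's algorithm or API; real toolchains typically add calibration data, per-channel scales, and activation quantization on top of this.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization (generic illustration, not QAIRT)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the signed 8-bit range.
    q = [max(-128, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float values from INT8 codes and their scale."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q, scale, restored)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy cost traded for the NPU's integer throughput.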

Memory & Storage

36 GB of LPDDR5 with ECC is a highlight. For 24/7 edge use under fluctuating temperatures, ECC adds significant value, because memory bit errors can be detected and corrected instead of silently corrupting data. Standard storage is 128 GB of onboard UFS 3.1, with an M.2 2230 NVMe (PCIe Gen4 x4) slot for expansion. The official docs walk through user installation (power off, insert the drive, secure it with the screw), which is reassuring. Ideally, keep the OS on UFS and use NVMe for logs, datasets, and models. This suits surveillance metadata, factory line logs, or local LLM caches.
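One way to apply the "OS on UFS, data on NVMe" split in an application is to pick the data directory at startup. The sketch below assumes a hypothetical NVMe mount point (`/mnt/nvme`) and a hypothetical fallback directory on the OS drive; neither path comes from Radxa's docs, so adjust both to your own setup.

```python
import os
import shutil

# Hypothetical mount point for the M.2 NVMe drive (not from the official docs).
NVME_MOUNT = "/mnt/nvme"
# Hypothetical fallback directory on the onboard UFS (OS) drive.
UFS_FALLBACK = "/var/lib/myapp"


def pick_data_dir(nvme_mount=NVME_MOUNT, fallback=UFS_FALLBACK, min_free_gb=10.0):
    """Return the directory to use for logs, datasets, and models.

    Prefers the NVMe drive when it is actually mounted and has at least
    min_free_gb of free space; otherwise falls back to the OS drive.
    """
    if os.path.ismount(nvme_mount):
        free_gb = shutil.disk_usage(nvme_mount).free / 1e9
        if free_gb >= min_free_gb:
            return nvme_mount
    return fallback


print(pick_data_dir())
```

Checking `os.path.ismount` rather than just whether the directory exists avoids quietly writing to the UFS drive when the NVMe drive failed to mount and only the empty mount-point directory remains.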

GenAI Performance

The official pages explicitly mention running GPT, LLaMA, and Stable Diffusion locally. For example, LLaMA-7B produces its first token in about 0.6 s and then generates roughly 12 tokens/s.
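To turn those two figures into a response time, a simple model is: time to first token plus the remaining tokens at the decode rate. The sketch below assumes the decode rate stays constant (no thermal throttling, no slowdown as the context grows), so treat its results as rough estimates.

```python
def estimate_response_time(output_tokens, ttft_s=0.6, decode_tok_per_s=12.0):
    """Rough end-to-end latency for generating output_tokens tokens.

    Defaults are the LLaMA-7B figures quoted on the official pages.
    Assumes a constant decode rate after the first token.
    """
    if output_tokens <= 0:
        return 0.0
    # The first token arrives after ttft_s; the rest arrive at decode_tok_per_s.
    return ttft_s + (output_tokens - 1) / decode_tok_per_s


for n in (50, 200, 500):
    print(f"{n} tokens: ~{estimate_response_time(n):.1f} s")
```

By this estimate a short 50-token answer takes under 5 s, while a 500-token answer takes over 40 s, which is why the article frames LLM use here as "lightweight" field responses.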

Don't judge by the "200 TOPS" figure alone. Real-world performance depends on model quantization, memory, and thermals. Good fits include lightweight LLM responses in the field, image classification and detection, and simple generation for verification or demos.
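Quantization also decides whether a model fits in memory at all. The sketch below estimates weight memory only, as parameters times bits per weight; it deliberately ignores the KV cache, activations, runtime overhead, and the share of the 36 GB used by the OS, so real requirements are higher.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate weight-only memory in decimal GB.

    1e9 parameters at 8 bits (1 byte) each is 1 GB, so
    GB = params_billion * bits_per_weight / 8.
    Ignores KV cache, activations, and runtime overhead.
    """
    return params_billion * bits_per_weight / 8


TOTAL_RAM_GB = 36  # AIRbox Q900 system memory, shared with the OS and other processes

for bits in (16, 8, 4):
    gb = weight_memory_gb(7, bits)
    print(f"7B @ {bits}-bit: ~{gb:.1f} GB of {TOTAL_RAM_GB} GB")
```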

Exterior

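The ports are practical: 12 V DC input, USB, 2x 2.5GbE, Type-C (console/debug), HDMI 2.0, a SIM slot, and an EDL (Emergency Download mode) button. A debug-ready Type-C port and EDL access are crucial for mass deployment, because they make recovery and reflashing much easier.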

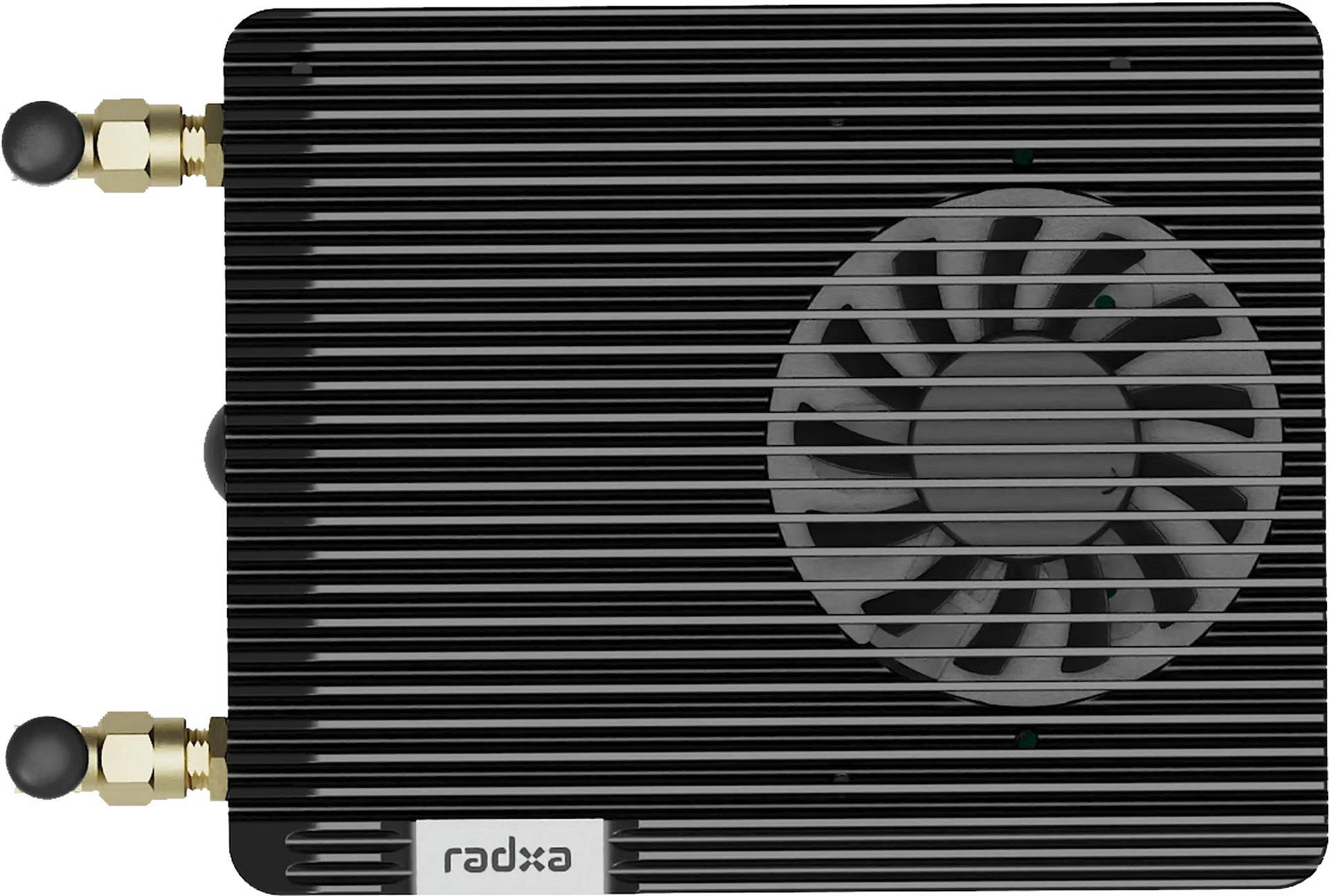

The top view shows the fan placement and the heatsink fins. AI boxes are prone to thermal throttling, so providing real hardware cooling instead of relying entirely on software throttling is good practice.

Others

Network: The two 2.5GbE ports (with TSN) are highly practical, offering both bandwidth and network segmentation (e.g., separating the upstream network from the device network). Wireless goes through Mini PCIe, so you can choose either a Wi-Fi 6/BT 5.4 module or a 4G/5G module. A Nano SIM slot is confirmed.
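When one port faces upstream and the other faces devices, the two subnets must not overlap, or routing between them becomes ambiguous. The sketch below checks an addressing plan with Python's standard `ipaddress` module; the port labels and subnets are hypothetical examples, not defaults of the AIRbox Q900.

```python
import ipaddress

# Hypothetical addressing plan: one 2.5GbE port upstream, the other for devices.
PLAN = {
    "upstream (port 1)": "192.168.1.0/24",
    "devices (port 2)": "10.10.0.0/24",
}


def check_segmentation(plan):
    """Return pairs of ports whose subnets overlap (empty list = cleanly segmented)."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    names = list(nets)
    clashes = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if nets[a].overlaps(nets[b]):
                clashes.append((a, b))
    return clashes


print(check_segmentation(PLAN) or "no overlapping subnets")
```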

Power/Cooling: 12 V DC input (12 V / 5.4 A recommended). Typical power draw is about 20 W, according to the official blog. A metal chassis plus a PWM fan is a solid cooling approach for 24/7 operation.
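For sizing power supplies and running costs, the quoted figures translate into simple arithmetic. The sketch below assumes the ~20 W typical figure holds continuously; draw under sustained NPU load may differ, so treat the energy numbers as a baseline rather than a measurement.

```python
ADAPTER_V = 12.0  # recommended adapter voltage (V)
ADAPTER_A = 5.4   # recommended adapter current (A)
TYPICAL_W = 20.0  # typical draw quoted on the official blog (W)

adapter_w = ADAPTER_V * ADAPTER_A    # adapter capacity in watts
headroom = adapter_w / TYPICAL_W     # adapter capacity as a multiple of typical draw
daily_kwh = TYPICAL_W * 24 / 1000    # energy per day at typical draw, running 24/7
yearly_kwh = daily_kwh * 365

print(f"Adapter: {adapter_w:.1f} W ({headroom:.1f}x typical draw)")
print(f"24/7 at typical load: {daily_kwh:.2f} kWh/day, {yearly_kwh:.0f} kWh/year")
```

A 64.8 W adapter gives roughly 3x headroom over the typical draw, which leaves margin for load spikes and USB/M.2 peripherals.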

Summary

The Fogwise AIRbox Q900 is exactly what it looks like: a box for running AI on-site. A 200 TOPS NPU, 36 GB of ECC memory, UFS plus NVMe storage, dual 2.5GbE, and SIM support cover the common edge requirements. Suited for: users who need on-site inference, or a rugged box with dual 2.5GbE and SIM support. Not suited for: those who want to port CUDA assets directly, or who expect fast generative AI with one click. Adopting it means committing to the Qualcomm ecosystem.

Personally, I value its sensible I/O and operations-minded design more than the raw specs.

| Retailer | Price (reference) |
|---|---|
| Arace Tech | Check the site (varies) |

Related Links

- Official Product Page (Radxa): https://radxa.com/products/fogwise/airbox-q900/

- Official Docs: https://docs.radxa.com/en/fogwise/airbox-q900

- Official Release (Radxa Blog): https://www.radxa.com/blog/fogwise-airbox-q900-official-release/

- Docs (M.2 2230): https://docs.radxa.com/en/fogwise/airbox-q900/hardware-design/nvme

- Docs (Mini PCIe): https://docs.radxa.com/en/fogwise/airbox-q900/hardware-design/mini-pcie

- Price/SKU (Arace Tech): https://arace.tech/products/fogwise-airbox-q900